Virtual Reality applications in the cloud

InfraVis User

Shamit Soneji

InfraVis Application Experts

Mattias Wallergård, Anders Follin, Günter Alce, Joakim Eriksson, Björn Landfeldt

InfraVis Node Coordinator

Anders Sjöström, Emanuel Larsson

Tools & Skills

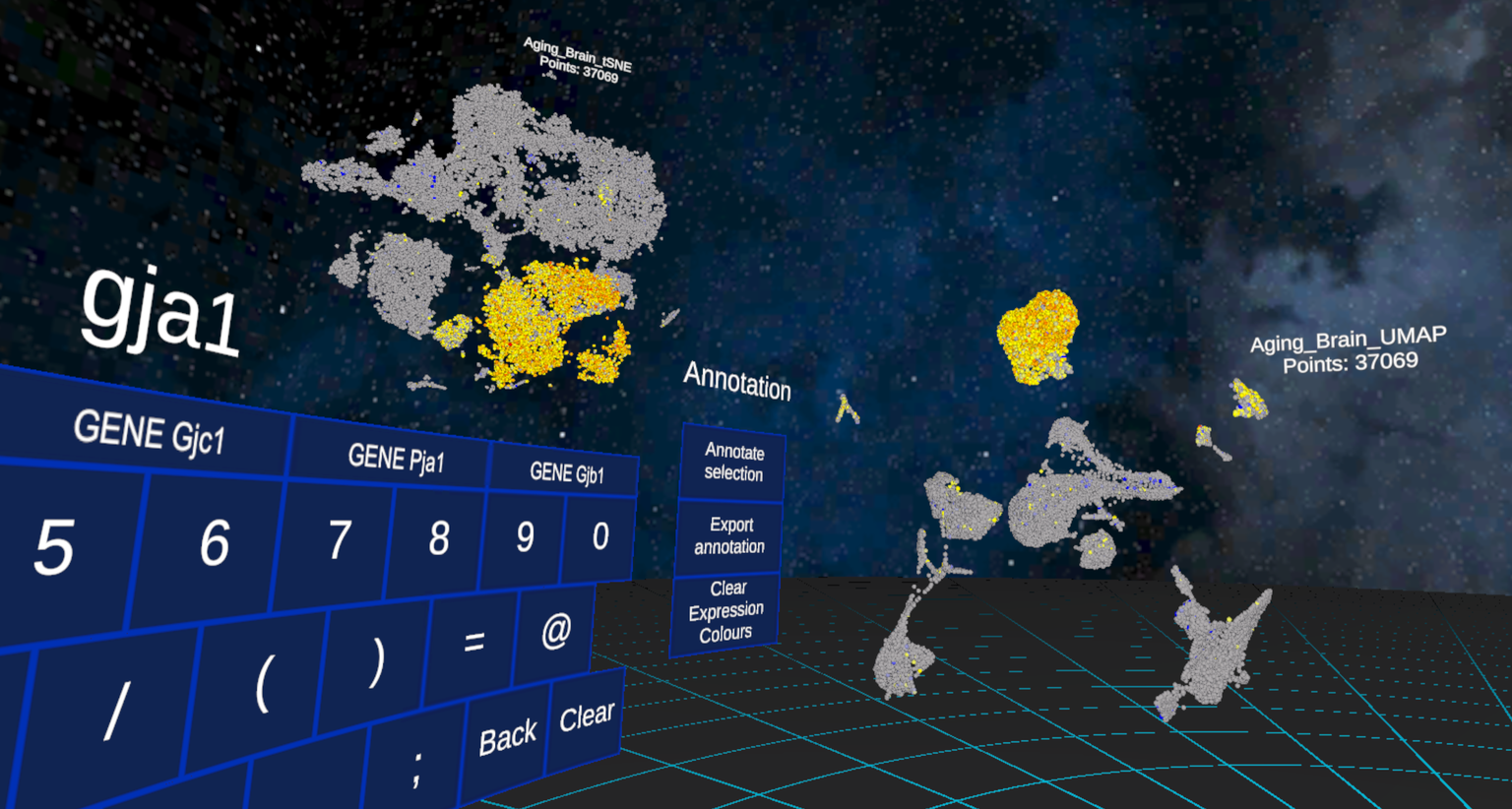

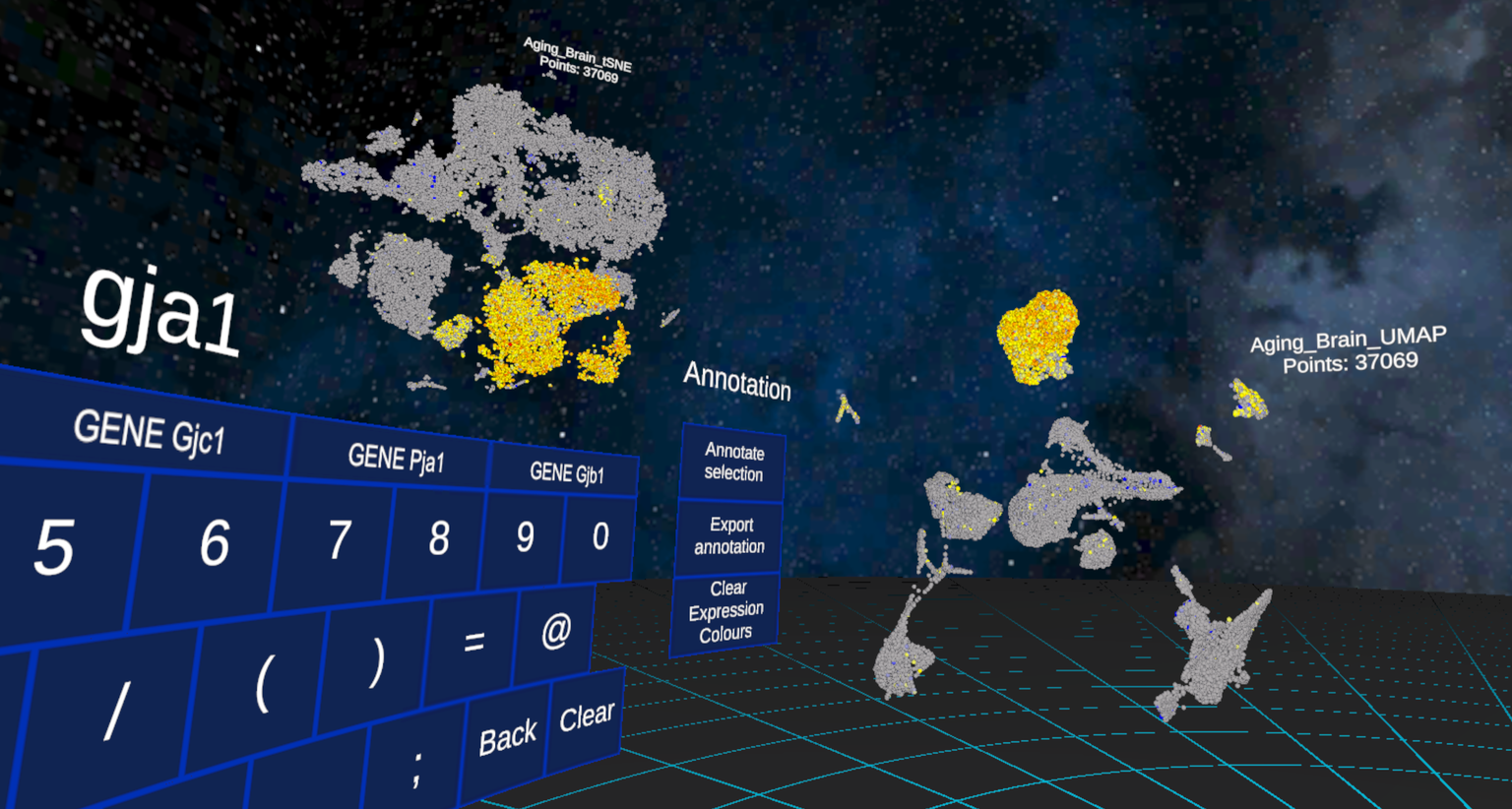

Virtual Reality, Distributed Virtual Reality, CellexalVR

About

The project aims to design an infrastructure that supports cloud-hosted VR applications delivered over 5G/6G networks.

Outcomes:

- Reduced need for expensive, immobile hardware setups when providing access to complex applications such as CellexalVR.

- Visualization and manipulation of large datasets through VR.

Background

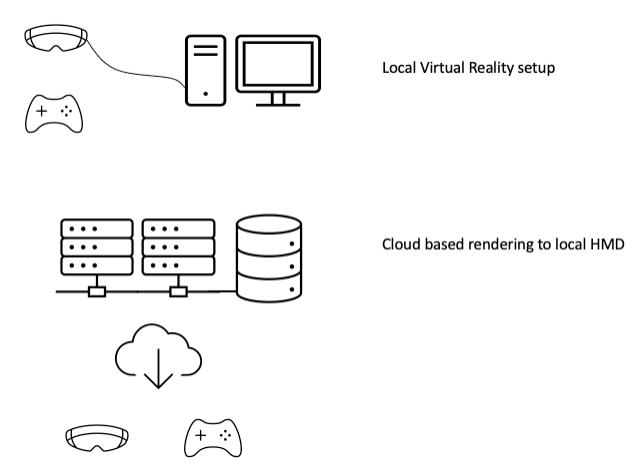

Virtual reality is a visualization technique that enables an immersive viewing experience with gesture-based interaction, typically using handheld controllers. The most common display device is a head-mounted display (HMD), in which each eye receives its own image, rendered with a separate viewing frustum, to achieve stereoscopic viewing.
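To make the per-eye frustum idea concrete, the sketch below computes a textbook off-axis (asymmetric) stereo frustum for each eye. It is an illustration under stated assumptions, not how CellexalVR or CloudXR compute their projections: real HMD runtimes report per-eye projection parameters themselves. The interpupillary distance (63 mm), field of view, clipping planes and zero-parallax ("convergence") distance are all assumed example values, and the helper names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Frustum:
    # Extents of the view volume on the near plane (glFrustum-style parameters)
    left: float
    right: float
    bottom: float
    top: float
    near: float
    far: float


def eye_frustum(eye_offset, fov_y_deg, aspect, near, far, convergence):
    """Off-axis frustum for an eye displaced `eye_offset` metres along x (negative = left eye)."""
    # Half-extents of the shared zero-parallax plane at distance `convergence`
    half_h = convergence * math.tan(math.radians(fov_y_deg) / 2)
    half_w = half_h * aspect
    # Project that plane's edges, as seen from the displaced eye, onto the near plane
    scale = near / convergence
    return Frustum(
        left=(-half_w - eye_offset) * scale,
        right=(half_w - eye_offset) * scale,
        bottom=-half_h * scale,
        top=half_h * scale,
        near=near,
        far=far,
    )


def stereo_frustums(ipd=0.063, fov_y_deg=90.0, aspect=1.0, near=0.1, far=100.0, convergence=2.0):
    """Return (left_eye, right_eye) frustums; all defaults are assumed example values."""
    left = eye_frustum(-ipd / 2, fov_y_deg, aspect, near, far, convergence)
    right = eye_frustum(+ipd / 2, fov_y_deg, aspect, near, far, convergence)
    return left, right


if __name__ == "__main__":
    left_eye, right_eye = stereo_frustums()
    print("left eye: ", left_eye)
    print("right eye:", right_eye)
```

The two frustums are mirror images of each other: each is shifted horizontally toward the other eye, so both views converge on the same zero-parallax plane. In a renderer, each eye's view matrix would additionally be translated by the same eye offset.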

Typically, the hardware setup consists of a powerful graphics workstation, a tethered HMD, and tracking equipment such as lighthouse base stations and handheld controllers. This is not only expensive but also immobile, and it limits the achievable performance to the hardware resources of the local workstation. Offloading compute and rendering to remote, cloud-based resources connected to the local tracking devices and HMD opens up new possibilities for a distributed scientific workflow, without the need for powerful local workstations whose performance is inherently limited. Moving VR compute and rendering to powerful cloud-based resources also facilitates resource sharing and gives access to high-performance storage, parallel compute, and high-end visualization.

At Lund University, a collaboration between the VR Lab, the LUNARC supercomputer center, the Computational Genomics group at Lund Stem Cell Center, and the Department of Electrical and Information Technology has developed a prototype system that showcases these possibilities.

The research group at Lund Stem Cell Center was interested in adding remote rendering and compute capabilities to their VR application for genome analysis (https://cellexalvr.med.lu.se/). Since the intended users are laboratory researchers with no or limited access to local compute and graphics hardware, streaming VR from the cloud could open new possibilities without expensive and technically challenging local setups. The Lund University VR Lab and the LUNARC supercomputer center were therefore ideal collaborators for a proof-of-concept setup.

The VR Lab at Lund University is an infrastructure for research and innovation that uses VR and AR technology for a wide range of applications. It offers a broad spectrum of hardware, from large immersive VR systems to wearable AR, as well as equipment for various types of interaction, e.g., eye tracking and haptics.

LUNARC is Lund University’s local supercomputer center, providing extensive compute and graphics resources to the university. LUNARC also has a heritage in visualization, developing end-to-end solutions for its user base. One example is the LUNARC HPC desktop, which provides hardware-accelerated 2D and 3D applications to end users. Because the graphics nodes are an integrated part of the compute infrastructure, including fast parallel storage and high-speed networking, techniques such as computational steering and customized workflows are possible and are already implemented for certain user groups and scenarios. Expanding this concept to VR is a natural next step.

During the work, it became clear that the project lacked in-depth expertise in wireless communication. The Department of Electrical and Information Technology, Faculty of Engineering, Lund University, was therefore contacted. Its research group has a strong interest in 5G/6G/edge use cases within XR and agreed to contribute to the pilot project.

Proof of Concept

CellexalVR was the ideal test application due to its nature and its need for a more flexible setup. Because its developers have an in-depth understanding of the application and how it behaves on different VR setups, it served as an appropriate benchmark.

The application was installed at the LUNARC supercomputer center, while the VR Lab provided local VR devices and VR expertise. The NVIDIA CloudXR API was chosen to stream VR content (left/right eye image pairs) to the client and to receive the corresponding tracking updates from the client HMD and hand controllers.

The server side consists of a graphics node with dual high-end CPUs, 512 GB RAM, and an NVIDIA A40 GPU, connected to the compute and storage infrastructure via HDR InfiniBand and 25 Gb Ethernet. During the initial tests, the rendered VR stream was received by a local workstation over 1 Gb Ethernet, with HTC Vive and Meta Quest 2 HMDs tethered to the workstation.
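A quick back-of-the-envelope calculation shows why the stereo stream cannot be sent as raw pixels over the 1 Gb Ethernet link. The sketch assumes a per-eye resolution of 1832×1920 (the commonly cited Meta Quest 2 panel resolution), 24 bits per pixel and a refresh rate of 80 Hz, and it ignores protocol overhead; the function name is hypothetical.

```python
def raw_stream_gbps(width, height, eyes=2, bits_per_pixel=24, fps=80):
    """Uncompressed bitrate in Gbit/s for a stream of stereo frames (no protocol overhead)."""
    bits_per_frame = width * height * eyes * bits_per_pixel
    return bits_per_frame * fps / 1e9


LINK_GBPS = 1.0  # the 1 Gb Ethernet link between graphics node path and client workstation

if __name__ == "__main__":
    raw = raw_stream_gbps(1832, 1920)  # assumed Quest 2 per-eye resolution
    print(f"raw stereo stream: {raw:.1f} Gbit/s")
    print(f"minimum compression ratio to fit the link: {raw / LINK_GBPS:.1f}x")
```

Under these assumptions the raw stream is roughly 13.5 Gbit/s, more than ten times the link capacity, which is why remote-rendering stacks of this kind typically encode the frames as compressed video rather than transmitting raw pixels.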

Results

Initial tests across the campus network, from the LUNARC data center to a local client, did work, but the experience was rather erratic, with intermittent frame drops and tracking synchronization issues. Our hypothesis was that the largely unknown network topology (e.g., the number of switch hops) was the main cause of this behavior. To minimize the number of unknowns, we took the campus network out of the equation by connecting the client directly to the graphics node over a minimal, well-defined network.

Surprisingly, the direct connection did not bring any significant improvement. Further investigation and testing revealed a mismatch between certain system drivers and the associated hardware that prevented the CloudXR API from functioning correctly. Resolving this mismatch yielded a steady frame rate of approximately 80 Hz and 1 ms latency over the direct connection. Moving the client back to the office and streaming across the campus network did not change the achieved performance, apart from a minor variation and increase in latency (net result 1-2 ms) due to actual network conditions.
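To put these numbers in perspective, the sketch below relates the reported latency to the per-frame time budget at 80 Hz. It treats the reported 1 ms and 2 ms figures as network latency, which is an assumption; end-to-end motion-to-photon latency also includes rendering, encoding, decoding and display time, which this arithmetic does not capture. The function name is hypothetical.

```python
def frame_budget_ms(refresh_hz):
    """Time available per frame, in milliseconds, at a given refresh rate."""
    return 1000.0 / refresh_hz


if __name__ == "__main__":
    budget = frame_budget_ms(80)  # the approximately 80 Hz reported above
    # Assumed interpretation: 1 ms over the direct link, up to 2 ms over the campus network
    for latency_ms in (1.0, 2.0):
        share = latency_ms / budget
        print(f"{latency_ms:.0f} ms network latency is {share:.0%} of a {budget} ms frame")
```

At 80 Hz each frame has 12.5 ms, so the measured network contribution of 1-2 ms corresponds to roughly 8-16% of a single frame interval.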

Next Steps

The POC used a tethered local setup, with the HMD connected to a local workstation via USB-C or HDMI depending on the HMD. The receiving CloudXR component ran on the workstation and delivered stereoscopic imagery to the HMD via SteamVR. An interesting next step would be to build the CloudXR client for an untethered HMD such as the Meta Quest 2 to enable wireless communication. Removing the cable would give the user a greater degree of freedom.

Profiling and possibly parallelizing the VR application, and offloading certain compute-intensive parts to the compute backend (i.e., the compute cluster), is expected to increase performance for CPU-bound applications.
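How much such offloading can help depends on how much of the runtime profiling shows to be parallelizable. Amdahl's law gives the upper bound on overall speedup; the sketch below applies it with hypothetical parallel fractions and worker counts, since no profiling data for CellexalVR is given here.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Overall speedup when only `parallel_fraction` of the runtime is spread over `workers`."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial = 1.0 - parallel_fraction  # part of the runtime that stays on one core
    return 1.0 / (serial + parallel_fraction / workers)


if __name__ == "__main__":
    for p in (0.5, 0.9):  # hypothetical parallelizable fractions from profiling
        for n in (8, 64):  # hypothetical number of cluster cores
            print(f"p={p}, N={n}: {amdahl_speedup(p, n):.2f}x")
```

The serial remainder caps the speedup at 1/(1 - p) regardless of cluster size, for example 2x when half the runtime is parallelizable, which is why profiling should come before offloading.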

Another area of interest is computational steering, in which VR is used to intervene manually in otherwise autonomous large-scale computational processes.

Applications and use cases that combine very large data with demanding computational needs are excellent candidates for remote rendering. Remote rendering has been used successfully for a decade through the LUNARC HPC desktop, and extending this technology to immersive VR would benefit certain applications. Examples include volume rendering and CFD analyses of large datasets using VR-enabled applications such as 3D Slicer and ParaView.